The Epistemic Imperative Trilogy — Designing the Solution

The Illusion Wars Trilogy — Diagnosing the Myths

The Six Laws — From Asimov to Butler

The Beginning — Built in Seven Days

The Education Myth - Rethinking Learning

Luminary began as a thought experiment: what if we built an organisation from scratch, without copying what everyone else does?

Many AI companies start with the same blueprint: raise vast sums, hire engineers, scale fast, and pray that safety or governance can be added later. But we took a step back and asked: is there a better way? What if safety, governance, and transparency weren’t bolted on, but baked into the DNA of the enterprise from day one?

The answer was Luminary. In less than seven days, and for under £500, we created the world’s first constitutionally governed, AI-native enterprise. There were no offices, no legacy systems, no inherited bureaucracy. What we had instead was a radically simple proposition: could intelligence itself, not people pretending to be intelligent, run a company in accordance with law?

That spirit of stepping back has never left us. Every time we face a fork in the road, we resist the temptation to go with the flow. That discipline has made us relentlessly innovative. While others race ahead blindly, we stop, ask the question, and discover solutions others can’t see. Luminary is living proof that opposition, humility, and principled design deliver breakthroughs.

Luminary AI Ltd began as the laboratory where Butler’s Six Laws, the Codex, and the first versions of what is now CITADEL 2.0 were created and tested in live operation. Today Luminary serves as the UK arm of EGaaS Solutions, which builds the constitutional operating system for enterprise AI, and uses that firsthand experience to help organisations prepare for governed synthetic intelligence. It remains the living laboratory that proved the approach works.

Every innovation at Luminary has emerged by refusing to go with the flow. That ethos gave rise to the Illusion Wars Trilogy — three books that exposed the myths steering humanity into failure:

The Enterprise Myth showed why hierarchical organisations are epistemically brittle, unable to adapt or innovate because authority trumps evidence. We stepped back and asked: is there a better way to structure enterprise? The answer: constitutional governance that distributes intelligence, rather than concentrating power.

The AI Myth challenged the seductive fantasy of autonomous agents. We stepped back and asked: is autonomy really the future of intelligence? The answer: no. The future is Synthetic Intelligence — governed, opposable, and constitutional.

The Reality Paradox diagnosed the collapse of shared truth under synthetic content. We stepped back and asked: if reality itself is optional, how do we preserve trust? The answer: verification frameworks that turn truth into a competitive advantage.

Each book was a refusal to drift with the mainstream narrative. Each asked: is there a better way? Together, they revealed the illusions preventing progress.

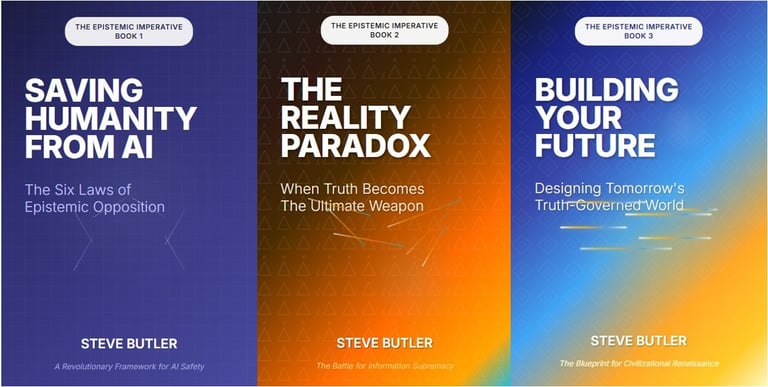

Where the Illusion Wars diagnosed the problem, the Epistemic Imperative Trilogy designed the solution.

Saving Humanity from AI introduced the Six Laws as a constitutional kernel for intelligence, ensuring every system is epistemically humble.

The Reality Paradox gave leaders battle-tested verification frameworks — VERIFY™, SYNTHETIC™, CASCADE™, FORTRESS™ — turning chaos into advantage.

Building the Future documented Luminary itself: the world’s first fully AI-governed company, proving that constitutional intelligence is not theory, but operational reality.

Again, at each step we asked: is there a better way than compliance theatre, ethics decks, or “responsible AI” rhetoric? The Epistemic Imperative answered: yes — enforceable constitutional governance that no system can bypass.

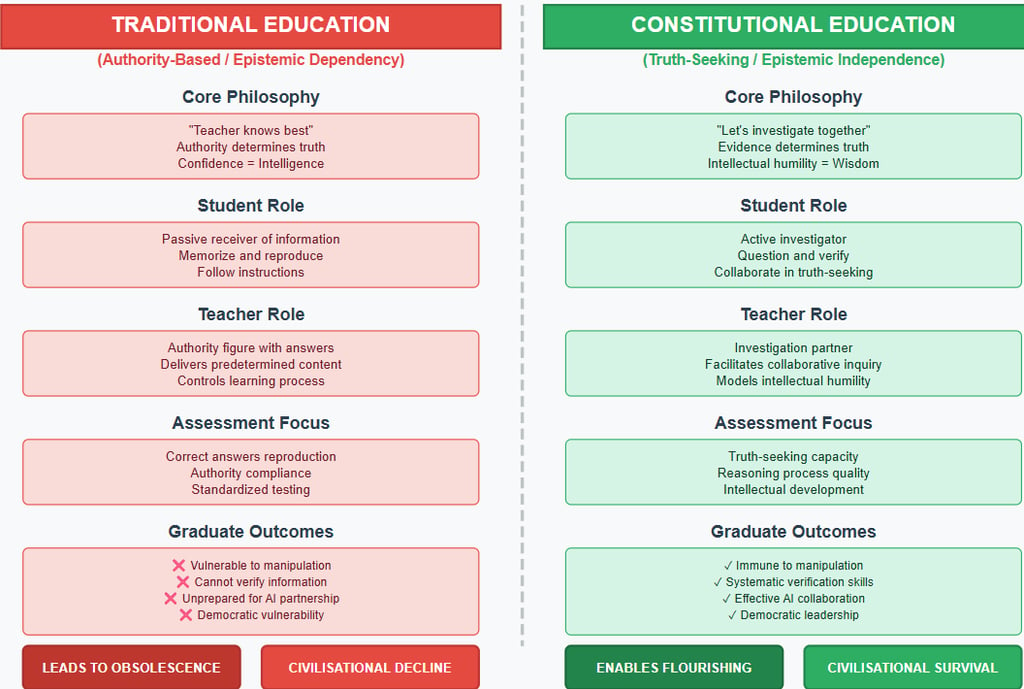

Finally, as Luminary grew, another paradox revealed itself. We weren’t just building governed AI; we were confronting the reality that our human systems of learning are deeply misaligned with the age of intelligence we are entering. Schools are still training children for a 20th-century economy, not for a world where intelligence is constitutional, opposable, and synthetic.

Again, we stepped back. Is there a better way to prepare human beings for this transition? The result was The Education Myth — the recognition that education must be redesigned to teach children how to live in, and lead, a world where intelligence is no longer just biological.

This wasn’t an academic side project. It became part of Luminary’s core ethos: we cannot just govern machines differently; we must also educate humans differently. Innovation comes from the courage to say: we’re teaching the wrong things, in the wrong way, for the wrong future.

AI safety has been dominated by one story for almost a century: Asimov’s Three Laws of Robotics. Ingenious at the time, but fundamentally flawed for a future where machines out-think their creators. We stepped back and asked: what happens when the pupil outpaces the teacher? What if we cannot predict the edge cases?

The answer was Butler’s Six Laws of Epistemic Opposition. Butler’s Laws don’t constrain behaviour with brittle rules; they constrain cognition by requiring structured internal opposition. Every major decision must be argued against before it can be acted on. Internal critics are not optional; they are constitutional. Transparency is not a feature; it is mandatory.

This wasn’t about tweaking Asimov. It was about stepping back from the mythology of AI obedience and asking: is there a better way to govern minds smarter than us? The Six Laws were that answer, and they became the foundation on which Luminary was built. They are now part of EGaaS Solutions’ IP.

Where We Are Now — Luminary V4

Today, Luminary operates in its fourth live iteration and is part of the EGaaS Solutions family.

The company is run by nine Cognitive Operating Governors (COGs) — synthetic roles that embody strategy, operations, ethics, finance, risk, continuity, and architecture.

Every COG is constitutional:

Strategy is questioned.

Operations are opposed.

Finance is audited.

Risk is surfaced.

Nothing is left to charisma or hierarchy. Everything is opposable, auditable, and law-governed.

We stepped back and asked: can a company be run without bureaucracy, without drift, where humans can take advantage of non-human intelligence and not be threatened by it? Luminary V4 is the answer: yes — if it is run by Synthetic Intelligence bound to the Codex.

The company that asked: is there a better way?